Why Does OAK Exist?

In trying to solve an Embedded Spatial AI problem, we discovered that although the perfect chip existed, there was no platform (hardware, firmware, or software) which allowed the chip to be used to solve such an Embedded Spatial AI problem.

So we built the platform.

Last updated: July 14, 2020 10:36

What is SpatialAI? What is 3D Object Localization?

First, it is necessary to define what ‘Object Detection’ is: It is the technical term for finding the bounding box of an object of interest, in pixel space (i.e. pixel coordinates), in an image.

3D Object Localization (or 3D Object Detection) is all about finding such objects in physical space, instead of pixel space. This is useful when trying to measure or interact with the physical world in real time.

Last updated: July 14, 2020 10:38

Why the differing Spatial AI Modes?

It is worth noting that monocular neural inference fused with stereo depth is possible for networks like facial-landmark detectors, pose estimators, etc. that return single-pixel locations (instead of, for example, bounding boxes of semantically-labeled pixels). However, stereo neural inference is advised for these types of networks for better results. Unlike object detectors (where the object usually covers many pixels, typically hundreds, which can be averaged for an excellent depth/position estimate), landmark detectors typically return single-pixel locations. So if there doesn’t happen to be a good stereo-disparity result for that single pixel, the position can be wrong.

And so running stereo neural inference excels in these cases, as it does not rely on stereo disparity depth at all, and instead relies purely on the results of the neural network, which are robust at providing these single pixel results. And triangulation of the parallel left/right outputs results in very-accurate real-time landmark results in 3D space.
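As a rough illustration of that triangulation step, here is a minimal sketch. It assumes rectified left/right images, a simple pinhole model, and a focal length derived from the 1280-pixel width and 71.8° HFOV listed in the stereo camera specifications in this FAQ. The `baseline_m` default is a placeholder assumption (the stereo baseline is not stated here), and the function names are hypothetical, not part of the OAK API.

```python
import math


def focal_length_px(width_px, hfov_deg):
    """Pinhole focal length in pixels from image width and horizontal FOV."""
    return (width_px / 2.0) / math.tan(math.radians(hfov_deg) / 2.0)


def triangulate(x_left, x_right, y, width_px=1280, height_px=720,
                hfov_deg=71.8, baseline_m=0.075):
    """Return (X, Y, Z) in metres for one landmark seen in both views.

    x_left / x_right: landmark column in the left / right rectified image.
    y: landmark row (the same in both images after rectification).
    baseline_m: ASSUMED camera separation, not taken from this FAQ.
    """
    disparity = x_left - x_right
    if disparity <= 0:
        raise ValueError("landmark must have positive disparity")
    f = focal_length_px(width_px, hfov_deg)
    z = f * baseline_m / disparity          # depth from similar triangles
    x = (x_left - width_px / 2.0) * z / f   # offset from the optical axis
    y_m = (y - height_px / 2.0) * z / f
    return x, y_m, z
```

Because the depth comes only from the two network outputs, a poor disparity match at that one pixel never enters the calculation.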

Last updated: July 14, 2020 10:42

How hard is it to get OAK running from scratch? What Platforms are Supported?

Not hard. Usually OAK is up/running on your platform within a couple minutes (most of which is download time).

The requirements are Python and OpenCV (which are great to have on your system anyway!).

Raspbian, Ubuntu, macOS, Windows, and many others are supported and are easy to get up/running. And since the host API is open source, you can build it for other systems with ease. We’ve already had customers build this for Linux distros we hadn’t even heard of!

It’s a matter of minutes to be up and running with the power of Spatial AI, on the platform of your choice. Below is DepthAI running on my Mac.

Last updated: July 14, 2020 10:58

Are OAK-D and OAK-1 easy to use with Raspberry Pi?

Very. It’s designed for ease of setup and use, and to keep the Pi CPU not-busy. Our documentation (including videos!) will get you up and running in seconds.

Last updated: July 14, 2020 10:58

Can I use multiple OAK-D and/or OAK-1 with one host?

Yes. OAK is architected to put as-little-as-possible burden on the host. So even with a Raspberry Pi you can run a handful of OAK-D and/or OAK-1 with the Pi and not burden the Pi CPU. It is purely limited by the number of USB ports on the host.

Last updated: July 14, 2020 10:58

Can I train my own Models for OAK?

Yes! We have tutorials around Google Colab notebooks you can even use for this. So you can even train for free. We have tutorials for MobileNetSSDv1, MobileNetSSDv2, tinyYOLOv3, and soon YOLOv4.

Last updated: July 14, 2020 10:58

Do I need Depth data to train my own custom Model for OAK-D?

No.

That’s the beauty of OAK-D. It takes standard object detectors (2D, pixel space) and fuses these neural networks with stereo disparity depth to give you 3D results in physical space.
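To make the idea concrete, here is a minimal sketch of fusing a 2D bounding box with a depth map. It assumes the depth map is in metres, is aligned with the image the detector ran on, and uses 0 for invalid pixels. It uses a median over the box rather than a plain average, and derives the focal length from the 71.8° HFOV in the stereo specifications. `locate_object` is a hypothetical helper, not an OAK API call.

```python
import math

import numpy as np


def locate_object(depth_m, bbox, hfov_deg=71.8):
    """Estimate the (X, Y, Z) position in metres of a detected object.

    depth_m: 2D array of per-pixel depth in metres (0 marks invalid pixels).
    bbox: (x_min, y_min, x_max, y_max) in pixel coordinates.
    """
    height, width = depth_m.shape
    x_min, y_min, x_max, y_max = bbox
    roi = depth_m[y_min:y_max, x_min:x_max]
    valid = roi[roi > 0]
    if valid.size == 0:
        return None  # no usable depth inside the box
    z = float(np.median(valid))  # robust to a few bad disparity matches
    # Pinhole focal length in pixels from the horizontal field of view.
    f = (width / 2.0) / math.tan(math.radians(hfov_deg) / 2.0)
    cx = (x_min + x_max) / 2.0
    cy = (y_min + y_max) / 2.0
    x = (cx - width / 2.0) * z / f
    y = (cy - height / 2.0) * z / f
    return x, y, z
```

The detector itself stays a plain 2D network. Only this post-processing step touches depth, which is why no depth data is needed for training.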

Now, could you train a model to take advantage of depth information? Yes, and it would likely be even more accurate than the 2D version. To do so, record all the streams (left, right, and color) and retrain on all of those, which would require modifying the front-end of, say, MobileNet-SSD to accept 5 input channels instead of 3 (1 for each grayscale camera, plus 3 for the color R, G, B).
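As a sketch of what that 5-channel input could look like, the snippet below stacks the three streams with NumPy. It assumes the frames have already been resized and aligned to the same height and width, which this FAQ does not cover. `stack_five_channels` is a hypothetical helper name.

```python
import numpy as np


def stack_five_channels(left, right, color):
    """Stack left (H, W), right (H, W) and color (H, W, 3) into (H, W, 5)."""
    if left.shape != right.shape or color.shape != left.shape + (3,):
        raise ValueError("frames must share the same height and width")
    # np.dstack promotes the 2D grayscale frames to (H, W, 1) before joining.
    return np.dstack([left, right, color])
```

The resulting array has channel order left, right, R, G, B (or B, G, R, depending on how the color frame was loaded), and the first layer of the network would need to be changed to match.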

Last updated: July 14, 2020 10:59

If I train my own network, which Neural Operations are supported by OAK?

See the VPU section here: https://docs.openvinotoolkit.org/latest/_docs_IE_DG_supported_plugins_Supported_Devices.html

Anything that’s supported there under VPU will work on DepthAI. It’s worth noting that we haven’t tested all of these permutations though.

Last updated: July 14, 2020 10:59

Has any thought been given to making this module easy to integrate into end user devices?

Yes. So OAK-D is based around a small, easy-to-integrate System on Module with a 100-pin modular connector. This allows fast integration into your product. And the OAK-D carrier board is open-source - so it’s not just a product - it’s also a reference design for your hardware.

The carrier board designs (including OAK-D, part-number = BW1098OBC) are already available here: https://github.com/luxonis/depthai-hardware

There are a variety of others as well, speeding integration for other use-cases/applications, including a variant with a built-in Raspberry Pi Compute Module, a Raspberry Pi HAT variant, and a version w/ modular stereo cameras for customizable stereo baseline.

Last updated: July 14, 2020 11:13

Can the SoM (System on Module) in OAK-D support interfaces other than USB?

The system on module included with the OAK-D is configured for USB boot - meaning the host loads everything to OAK-D and there is only minimal boot code and calibration data stored onboard. So the models in this KickStarter require a host computer (Linux, Windows, or Mac).

The System on Module has a 100-pin interface however (DF40C-100DP-0.4V(51)) that does support SPI, UART, and I2C. And we will be open-sourcing this interface protocol as well (just like we have for USB).

To use these interfaces, one of our SoM variants that supports other boot modes is required. This allows operation in systems that don’t have USB, and even with microcontrollers that have no operating system.

This variant of the SoM can be ordered to boot off of the following storage:

1. Onboard NOR flash
2. Onboard eMMC
3. SD-Card

Please reach out to us if these are of interest, and check out our coming reference design for this variant of the SoM, here: https://github.com/luxonis/depthai-hardware/issues/10.

Last updated: July 14, 2020 11:38

What is the interface for the OAK-D SoM?

The OAK-D SoM uses the DF40C-100DP-0.4V(51) to interface to the main board. This connector is what allows this embedding into actual products. It’s small, and fairly common for such integrations, including full MIPI rate support.

Last updated: July 14, 2020 11:38

Is a full -40C to 85C industrial range possible with OAK and OAK-D?

This has not yet been qualified on OAK. The hardest challenge will likely be the 85C limit, but it is likely surmountable by integrating OAK directly into an enclosure so that the enclosure acts as the heatsink.

The maximum supported die temperature is 105C, so this application is doable with careful thermal design of the enclosure.

Last updated: July 14, 2020 11:38

While I'm Waiting on Hardware Can I Start Learning How to Use the System? Studying Usage Examples/etc.?

Thanks for your pledge! And great question. Although much of this will be improved and additional functionality will exist by the time you receive your hardware, the FAQ here (https://docs.luxonis.com/faq/) will help get you up to speed on a slew of things.

And there are also tutorials on things like using pre-trained models (https://docs.luxonis.com/tutorials/openvino_model_zoo_pretrained_model/) and how to train your own (https://docs.luxonis.com/tutorials/object_det_mnssv2_training/). As part of hitting the $100k stretch goal, we will also be making videos covering each of these and more.

Last updated: July 14, 2020 12:00

What's the FoV on the OAK-D?

Color Camera Specifications:

- IMX378 Image Sensor
- 4K, 60 Hz Video (max 4K/30fps encoded h.265)
- 12 MP Stills
- 4056 x 3040 pixels
- DFOV: 81°
- HFOV: 68.8°
- Lens Size: 1/2.3 inch
- AutoFocus: 8 cm to ∞
- F-number: 2.0

Stereo Camera Specifications:

- OV9282 Image Sensor
- Synchronized Global Shutter
- Excellent Low-light
- 1280 x 720 depth
- DFOV: 83°
- HFOV: 71.8°
- Lens Size: 1/2.3 inch
- Fixed Focus: 19.6 cm to ∞
- F-number: 2.2

Last updated: July 14, 2020 13:15

What is the Resolution of the Calculated Depth Map on OAK-D?

1280x720. The grayscale global shutter cameras (based on the OV9282) support up to 1280x800 resolution, but the depth map is center-cropped to the middle 720 of the 800 total vertical pixels.
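For anyone lining up a full 1280x800 grayscale frame with the 1280x720 depth map on the host, the crop works out as in this small sketch. It assumes the crop is exactly symmetric, and `center_crop_rows` is a hypothetical helper, not an OAK API call.

```python
def center_crop_rows(total_rows, kept_rows):
    """Return (start, stop) row indices for a vertical center crop."""
    if not 0 < kept_rows <= total_rows:
        raise ValueError("kept_rows must be between 1 and total_rows")
    start = (total_rows - kept_rows) // 2
    return start, start + kept_rows


# 800 sensor rows cropped to the middle 720: rows 40 up to (not including) 760.
start, stop = center_crop_rows(800, 720)
```

With a NumPy or OpenCV image this becomes `frame[start:stop, :]`, dropping 40 rows from the top and 40 from the bottom.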

Last updated: July 14, 2020 13:15

Are the left and right cameras grayscale?

Yes. And global shutter. Here is a quick overview of the left and right camera specifications:

Stereo (left and right) Camera Specifications:

- OV9282 Image Sensor
- Synchronized Global Shutter
- Excellent Low-light
- 1280 x 720 depth
- DFOV: 83°
- HFOV: 71.8°
- Lens Size: 1/2.3 inch
- Fixed Focus: 19.6 cm to ∞
- F-number: 2.2

Last updated: July 14, 2020 16:17

Is the raw image data from the left and right cameras available over the USB interface, or are they only used for depth perception?

Yes. The raw left and right camera data is available at full frame rate. And it can be requested in parallel to the depth or disparity output.

The left/right can also be optionally encoded with h.264 or h.265 encoding at full frame rate as well.

Last updated: July 14, 2020 16:17

If the L/R image data is available, are the left and right cameras calibrated to the 4K RGB image, such that color could be applied to the L/R images, or would that be new work?

The left/right cameras are factory calibrated (a 2-camera calibration) but they are not at this time calibrated to the RGB camera.

We have on our to-do list to investigate doing a 3-camera calibration (i.e. to include the color camera) but we have not investigated this yet, so we don’t know how reasonable it is.

The color camera is auto-focus, so this has some impact on calibration. We imagine we’d need to calibrate it for the hyperfocal distance (approximately 1.8 meters for the color auto-focus camera).

We will investigate if this is readily doable and then do it if it is feasible, storing the calibration to the onboard EEPROM (which is already used to store the 2-camera calibration for the left/right cameras).

Last updated: July 14, 2020 16:17

It says that "The requirements are Python and OpenCV", which is a bit unusual for a device like a camera. Will it be possible to just connect the device to any machine through the USB-C connector and read it as a normal webcam device?

So the reason for the C++/Python API is because OAK-1 and OAK-D can do a ton of things which a normal camera can’t - and so the UVC (USB Video Class) does not have support for them.

For example, OAK-1 and OAK-D can return purely structured data and no video at all: say, where all the strawberries are in physical space, their approximate ripeness, and whatever else you want the neural model to do.

So in this case it’s just metadata, structured data about the world. So having a webcam interface there would be cumbersome (and likely not possible).

And OAK can output a whole slew of other things that UVC doesn’t support, all configurable through the API… combinations of metadata, region-of-interest outputs, raw frames, and encoded frames.

And on getting raw information from the sensor, that is supported through the OAK API. As it stands now the max throughput seems to be around 700MB/s, which is enough to do 12 million pixels (12MP) at ~41FPS.

Now… we can also likely support a webcam mode. But in this case the host computer would need to issue the command to OAK-1 and/or OAK-D to enter UVC-mode… and then OAK-1 would show up as 1 webcam and OAK-D would show up as 3.

We have done some initial proof-of-concepts on this but there is some technical risk (some computers get upset if you change your USB class w/out actually physically unplugging/replugging the device, for example).

Last updated: July 14, 2020 16:46

What is the power consumption of the two devices while operational?

It heavily depends on the modes of operation, but OAK-1 is about 2.5W and OAK-D is about 4W.

Last updated: July 14, 2020 16:48

Do I need to install OpenVINO or any other API? Or can I just use the camera with OpenCV? Will there be documentation on how to interface directly to the camera? Is it a UVC camera?

OpenCV in conjunction with the OAK API is all that’s required.

OpenVINO is optional and is only needed if you would like to convert neural models on your local machine. We will provide a cloud endpoint which can automatically convert models for you as well.

Yes, there will be (updated) documentation on how to interface directly to the camera. It is (and will be) configurable via a JSON config that is passed to OAK-1 and OAK-D. And there is (and will be) a command-line interface for quickly prototyping/trying out various options.

More details on the existing command structure are below, in a link that covers the current JSON config (which will improve/change with multiple efforts we have not yet open-sourced, as they’re in testing): https://docs.luxonis.com/faq/#how-do-i-display-multiple-streams

In terms of supporting UVC, we have done some initial proof-of-concepts on this but there is some technical risk (some computers get upset if you change your USB class w/out actually physically unplugging/replugging the device, for example).

And in this case the host computer would need to issue the command to OAK-1 and/or OAK-D to enter UVC-mode… and then OAK-1 would show up as 1 webcam and OAK-D would show up as 3.

Last updated: July 14, 2020 16:55

Can I make my own case for OAK-D?

Yes. And you can start now. You can find the MIT-licensed design files here: https://github.com/luxonis/depthai-hardware/tree/master/BW1098OBC_DepthAI_USB3C

(The part-number for OAK-D is BW1098OBC.)

And there exists in there already a STEP file for mounting the OAK-D to a GoPro mount. And speaking of which… this is a fantastic kit when used with that GoPro mount to mount OAK-D to nearly anything: https://www.amazon.com/gp/product/B01171X0UW/ref=ppx_yo_dt_b_search_asin_title?ie=UTF8&psc=1

Last updated: July 14, 2020 19:37

Can OAK-D be powered off of USB3?

Yes. The OAK-D is designed with an ideal-diode-or between the barrel jack 5V power and the USB power.

The reason the barrel jack is on there (and the power supply is included) is that some USB2 devices can only provide 2.5W (and technically, USB2 devices are only spec’ed to provide 2.5W).

USB3 devices can provide 900mA at 5V though, and so OAK-D can be powered by USB3 devices.
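The power budget behind this answer is simple arithmetic, sketched below. The 4 W figure is the "up to 4W" OAK-D number from this FAQ, 500mA is what 2.5W at 5V works out to, and the check ignores cable losses and ports that deliver less than their spec. The names are illustrative only.

```python
def available_power_w(voltage_v, current_a):
    """Power a port can supply, in watts."""
    return voltage_v * current_a


USB2_W = available_power_w(5.0, 0.5)  # 2.5 W, the USB2 figure above
USB3_W = available_power_w(5.0, 0.9)  # 4.5 W, from 900mA at 5V
OAK_D_PEAK_W = 4.0                    # "up to 4W" per this FAQ


def can_power(source_w, load_w=OAK_D_PEAK_W):
    """True if the source can cover the load's peak draw."""
    return source_w >= load_w
```

Here `can_power(USB2_W)` is false and `can_power(USB3_W)` is true, which is why the barrel jack exists for USB2 hosts.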

Last updated: July 14, 2020 19:44

Would it be possible to shut down the stereo cameras (maybe over the API) to bring down the consumption when not using depth perception mode?

Yes, it will be possible to turn off the stereo cameras when configuring the device via the API. We have power control on the cameras for this reason (it puts them in standby, which is effectively no power).

Last updated: July 14, 2020 19:52

Is Python Required? Can I use C++ Instead?

Python is not required. The OAK API is written in C++ with pybind11 for Python bindings, and so C++ is directly supported as well.

Last updated: July 14, 2020 20:12

What is the node based editor you show based on? Is it based on an existing framework?

We discovered PyFlowOpenCv, which is the coolest thing, only after having started on our own graphical editor, and found that PyFlow was doing all that we wanted while looking way cooler!

So our pipeline builder is a modified version of PyFlowOpenCv (https://github.com/wonderworks-software/PyFlowOpenCv) designed to work seamlessly with OAK-D’s CV and AI acceleration.

And a cool potential (although not fully explored) is that this same node-based editor could then work for OAK-D functions at the same time as host-side additional OpenCV functions, if such a combination is of interest.

Last updated: July 15, 2020 11:38

What are the size, weight, and power requirements of the OAK-D?

The source design files (including dimension drawings, STEP file, a reference mount design, and the source Altium projects) are here: https://github.com/luxonis/depthai-hardware/tree/master/BW1098OBC_DepthAI_USB3C

And on that page the dimensions are here: https://github.com/luxonis/depthai-hardware/tree/master/BW1098OBC_DepthAI_USB3C#board-layout--dimensions

The power use is up to 4W (0.8A at 5V) when running many things in parallel.

And the weight is 1.5 oz. including heatsink.

Last updated: July 15, 2020 13:27

What is the mass of OAK-1 and OAK-D?

Including the heatsinks:

- OAK-1: ~1.0 oz. (29 grams)
- OAK-D: ~1.5 oz. (43 grams)

Last updated: July 15, 2020 13:57

What of the 3A Controls (Auto-Focus, Auto-Exposure, Auto-White-Balance) Will be Exposed?

We plan to implement 3A controls from the host (see https://github.com/luxonis/depthai/issues/154). As it stands now the auto-focus modes are already controllable (https://docs.luxonis.com/faq/#autofocus) via the OAK API.

So this would allow you to focus at the host’s discretion (so just whenever the host tells OAK to do so).

Last updated: July 15, 2020 15:12

Do you have any full-resolution still frame examples from your board?

We do not have full 12MP stills (although we’ll probably go take some soon now that you ask), but we do have 8MP (i.e. 4K) video recording examples.

Here are some examples:

https://www.youtube.com/watch?v=vEq7LtGbECs&feature=youtu.be

https://www.youtube.com/watch?v=XRFWX1tBC5o&feature=youtu.be

Make sure to select 2160p in the YouTube viewer (the default is 1080p, which is of course 1/4 the resolution). And on the second one there was some dust on the lens from the unit sitting on my desk lens-up for weeks… so it has some funny lens flares as a result when the sun catches it directly.

And sorry there aren’t more interesting examples… it’s a bit hard to film cool stuff during a Pandemic.

Last updated: July 15, 2020 15:12

What Interfaces are on the OAK System on Module?

- 2x 2-lane MIPI Camera Interface
- 1x 4-lane MIPI Camera Interface
- Quad SPI with 2 dedicated chip-selects
- I²C
- UART
- USB2
- USB3
- Several GPIO (1.8 V and 3.3 V)
- Supports off-board SD Card
- On-board NOR boot Flash (optional)
- On-board EEPROM (optional)
- All power regulation, clock generation, etc. on module
- All connectivity through single 100-pin connector (DF40C-100DP-0.4V(51))

Last updated: July 15, 2020 15:16

Will I be able to learn the requirements to operate an OAK-D product and unleash its full potential from scratch?

Yes. For sure. So we have a process for converting TensorFlow models to run directly on the device. It’s similar to converting for NVIDIA acceleration for example, but a bit easier.

In fact the training tutorial here is in TensorFlow and then converts for running on the device at the end: https://docs.luxonis.com/tutorials/object_det_mnssv2_training/

And for getting up and running with pre-trained models, see the basic model running here: https://docs.luxonis.com/tutorials/openvino_model_zoo_pretrained_model/

Last updated: July 22, 2020 13:27

Do I need a Raspberry Pi?

No need for a Raspberry Pi. OAK works on anything that runs OpenCV.

Last updated: July 22, 2020 13:27

Will you be releasing a course or tutorial for this product to teach from scratch?

Yes, in fact several of these getting started guides are out now.

- Running pre-trained models: https://docs.luxonis.com/tutorials/openvino_model_zoo_pretrained_model/

- Training (or converting) your own models: https://docs.luxonis.com/tutorials/object_det_mnssv2_training/

Last updated: July 22, 2020 13:27

Description says software as well as hardware is opensource, but I can't find any related source anywhere, could you please provide a reference of the same. Where are they?

Thanks for the interest and great question!

Yes, see here: https://docs.luxonis.com/faq/#githubs

- https://github.com/luxonis/depthai-hardware - DepthAI hardware designs themselves.

- https://github.com/luxonis/depthai - Python Interface and Examples

- https://github.com/luxonis/depthai-api - C++ Core and C++ API

- https://github.com/luxonis/depthai-ml-training - Online AI/ML training leveraging Google Colab (so it’s free)

- https://github.com/luxonis/depthai-experiments - Experiments showing how to use DepthAI.

That lists all the Githubs. We are moving these under OpenCV now that this KickStarter is public - we are just doing refactoring first so that it integrates cleanly and does not clutter the OpenCV repository.

Last updated: July 22, 2020 13:56

Do OAK-1 and OAK-D have Wifi embedded?

No, but we are working on a version of OAK-D that has built-in WiFi and BT and has 1-minute setup with AWS-IoT (and other cloud MQTT solutions). See here for details and/or subscribing to updates: https://github.com/luxonis/depthai-hardware/issues/10

Last updated: July 22, 2020 14:07

What is DepthAI? (and megaAI) and how are they related to the OpenCV AI Kit (OAK)?

The background here is Dr. Mallick (CEO OpenCV) had reached out very early on to Luxonis, who was building these systems, after he saw the value in them. Actually, this was all prompted by a PyImageSearch tweet about DepthAI that Dr. Mallick saw, this one: https://twitter.com/PyImageSearch/status/1192448356913688576.

Dr. Mallick approached Luxonis with the great idea to make this the OpenCV AI Kit and Luxonis loved the idea. So together Luxonis and OpenCV have been working towards that since (now for quite a long time).

We wanted to mature the platform and get it into a good position prior to launching this KickStarter… so behind the scenes we’ve been developing it as DepthAI/megaAI before doing our big launch here as the OpenCV AI Kit.

This has allowed us to get feedback on the required modes, most valuable hardware permutations, mature the firmware, get documentation ready, etc. prior to launching.

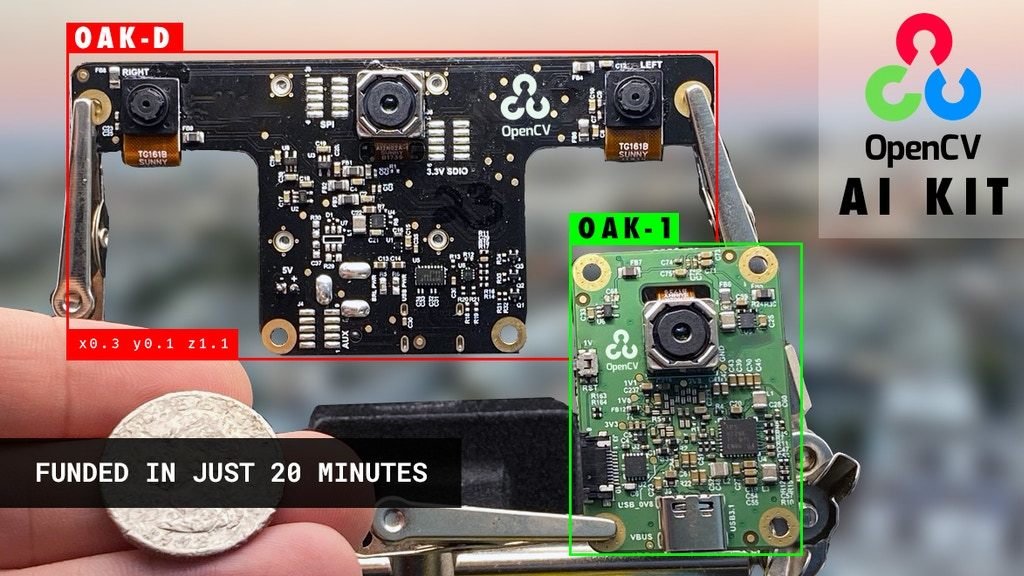

So the OpenCV AI Kit is based on DepthAI (PN: BW1098OBC = OAK-D) and megaAI (PN: BW1093 = OAK-1).

We are in the process of moving the Open Source code to the OpenCV Github. We are doing some additional refactoring, and behind-the-scenes OpenCV work which will make this integration cleaner.

Last updated: July 23, 2020 11:07

Who is Luxonis? What is their relationship with OpenCV?

Luxonis is behind writing the core capabilities and developing the hardware of the OpenCV AI Kit. Dr. Mallick approached Luxonis with the great idea to make this the OpenCV AI Kit and Luxonis loved the idea. So together Luxonis and OpenCV have been working towards that since (now for quite a long time).

Luxonis is also responsible for production of all of the OpenCV AI Kit and fulfillment of this KickStarter.

Last updated: July 23, 2020 11:08

Will OAK eventually support Micropython?

Super-great question. So to add additional flexibility to the pipeline builder, we will be supporting executing Micropython code directly on the Myriad X as a block in the pipeline.

So this will allow folks to set up their own rules between CV/AI nodes, and also write their own protocol implementations on the platform. Say for SPI, UART, or I2C interfacing with their own existing systems and protocols.

A note on UART/SPI/I2C support: Reach out to us if this is of interest, as there is a slightly different System on Module that affords operation without USB. It has a larger onboard flash memory, and a slightly different hardware configuration to boot from flash instead of over USB. All the models in this KickStarter require USB to operate.

This may not ship at the same time as the KickStarter (hence it’s not listed in the features on the campaign page), but it is a feature we are super excited about, so the potential exists. And worst-case this should exist early 2021.

Last updated: July 25, 2020 09:26

Does OAK work with the Jetson Series (Jetson Tx1, Jetson Tx2, Jetson Nano, Jetson NX, etc.)?

Yes.

OAK works cleanly on the Jetson series. And we actually have many early users who are using OAK with I think every Jetson series.

We even did a quick stab at making OAK work with OpenDataCam (which is Jetson-based). See here: https://twitter.com/luxonis/status/1272652967712186368?s=20

And here it is running on the Jetson Tx2 on my desk. :-)

https://photos.app.goo.gl/bq5xAeXS3PWEdEYH7

Last updated: July 25, 2020 11:51

What are the specifications of the processor? And what are the interfaces on the System on Module?

The Myriad X specifications are here: https://newsroom.intel.com/wp-content/uploads/sites/11/2017/08/movidius-myriad-xvpu-product-brief.pdf

And this is a good reference as well, although there’s a typo on there… 4K/60 encoding of any kind is not supported. The max is 4k/30. https://www.anandtech.com/show/11771/intel-announces-movidius-myriad-x-vpu

And more information on the system on module is here: https://docs.luxonis.com/products/bw1099/#setup

Last updated: July 30, 2020 20:19

What will be the precision and accuracy for depth measurement in OAK-D? Will it be able to measure dimensions of objects in 3D?

So OAK-D uses passive stereo depth (no active illumination), so its accuracy requires adequate lighting. The typical accuracy is approximately 3% of distance, but varies depending on object/actual distance/etc.
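To give a feel for what "approximately 3% of distance" means in practice, here is a small sketch that turns a distance into an expected error band. It treats the 3% as a flat relative error, which is a simplification since the real figure varies with the object, lighting, and distance. The function names are illustrative.

```python
def depth_error_m(distance_m, relative_error=0.03):
    """Typical depth error in metres for a given distance."""
    if distance_m < 0:
        raise ValueError("distance must be non-negative")
    return distance_m * relative_error


def depth_range_m(distance_m, relative_error=0.03):
    """(nearest, farthest) plausible true distance for a measured distance."""
    err = depth_error_m(distance_m, relative_error)
    return distance_m - err, distance_m + err
```

So an object measured at 2 m would typically be within about 6 cm of that, and the band widens in proportion as the object moves farther away.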

It’s worth noting that OAK-D was not architected with 3D scanning or 3D recreation of objects in mind - but in terms of measuring dimensions of objects in 3D space, yes, this is definitely possible.

See here for maximum and minimum distances of OAK-D, keeping in mind the part number for OAK-D is BW1098OBC: https://docs.luxonis.com/faq/#mindepths

Last updated: August 06, 2020 08:23

Why Would I want PoE?

So PoE is mainly about cabling convenience. It provides power and data at up to 100 meters (328.1 feet) at 1gbps.

So it’s MUCH slower than USB3, which is 10gbps, but it can go MUCH longer distances and provides 15W of power (so 3.3x the base USB3 power at 5V, and 6x that of standard USB2 devices).

For just using OAK with a computer, USB is more convenient though. So PoE is useful if you already have a PoE switch (like UniFi’s awesome line of PoE switches: https://unifi-network.ui.com/switching).

So unless you already specifically want PoE, we’d recommend sticking with USB. It’s easier if you don’t already have PoE switches/etc.

Unless of course you’re feeling adventurous - and then get both/all of them!

Last updated: August 06, 2020 20:40

What are the maximum FrameRates? Can I control shutter speed? What are supported resolutions? What is the latency?

Our fastest supported framerate right now is 60FPS, which is on the grayscale cameras. The max theoretical on the grayscale is 120FPS but we have not yet implemented support for that. The max theoretical for the RGB camera is 60FPS, although only 30FPS (at 4K) is currently supported through the API. It is likely if faster framerate is a popular request that 60FPS at 1920x1080 will be supported from color.

By default the ISP controls the shutter speed (i.e. exposure time, there is no physical shutter). But we will be exposing the capability to lock this and set this manually for both RGB and grayscale cameras.

The current supported resolutions are:

RGB:

- 4K (3840 x 2160) at 30FPS
- 1080p (1920 x 1080) at 30FPS

Grayscale:

- 1280x800 at 1 to 60FPS (programmable at 1FPS increments)
- 1280x720 at 1 to 60FPS (programmable at 1FPS increments)
- 720x400 at 1 to 60FPS (programmable at 1FPS increments)
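If you want to sanity-check a requested camera mode on the host before sending it to the device, the supported modes listed above can be captured in a small table like this sketch. The table and `is_supported` are hypothetical and not part of the OAK API. They only mirror the modes listed in this answer, which may change as more modes are added.

```python
# (width, height) -> (min_fps, max_fps), copied from the list in this answer.
SUPPORTED_MODES = {
    "rgb": {
        (3840, 2160): (30, 30),
        (1920, 1080): (30, 30),
    },
    "grayscale": {
        (1280, 800): (1, 60),
        (1280, 720): (1, 60),
        (720, 400): (1, 60),
    },
}


def is_supported(camera, width, height, fps):
    """True if the mode matches one of the currently listed modes."""
    limits = SUPPORTED_MODES.get(camera, {}).get((width, height))
    if limits is None:
        return False
    min_fps, max_fps = limits
    # Frame rate is programmable in whole-FPS steps only.
    return isinstance(fps, int) and min_fps <= fps <= max_fps
```

For example, `is_supported("grayscale", 1280, 720, 45)` passes, while `is_supported("rgb", 3840, 2160, 60)` does not, since 60FPS color is not yet exposed.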

And the approximate latencies over USB are here: https://docs.luxonis.com/faq/#what-are-the-stream-latencies

It’s worth noting that those were taken using an iPhone recording slow-motion on a monitor off of an iMac. So the latencies listed there include the display latency and the host-side latency to receive the USB information, process it, and then display it. So the actual OAK latency is likely well below those listed there.

Last updated: August 07, 2020 09:06

What benefits does the Wi-fi/Bluetooth have over the traditional USB, and can that module also use the USB cord if needed?

It’s mainly for allowing standalone operation without needing a host. The WiFi+BT version will have built-in ESP32 for connecting easily with all sorts of things over WiFi or BT, leveraging the huge open source community and use-case examples around the ESP32. For example using w/ AWS-IoT is then very easy, so is interacting directly with smartphones over BT/etc.

More details on the basis of this design are here:

https://github.com/luxonis/depthai-hardware/issues/10

And yes, USB will be an option as well. So the WiFi + BT version can be used over USB just like a standard OAK-D.

The main trade is time. OAK-D USB is mature and already in use, whereas the WiFi+BT effort is fairly early-on, so is expected to ship in March instead of the USB version of OAK-D which will ship in December.

And as more background, the idea for BT/WiFi model came actually from our team wanting to build an easier-to-wear standalone visual-assistance device for the visually impaired and fully blind.

So more on the background and architecture decisions is here:

https://github.com/luxonis/depthai-hardware/issues/9

And then that flowed into using the ESP32 for the WiFi + BT as it also has a TON of AWS-IoT support and other super-easy-to-use examples for getting data off, and even can run Arduino. So it makes it very easy to get all sorts of examples like you mention (transferring data, commanding the system, etc.) going over WiFi/BT locally - to a smartphone or another computer - or to the cloud through AWS-IoT, Azure, etc.

More details on this design are below:

https://github.com/luxonis/depthai-hardware/issues/10

It will be the basis for the WiFi + BT version of OAK-D.

And this design also serves as a reference for other pure-embedded applications. The communication is SDIO (Quad SPI) which allows fast transfer and works with a ton of chipsets (including ones that don’t have an OS). More background on that is here:

https://github.com/luxonis/depthai/issues/140

So yes, you can get data out without connecting to USB.

And the main use for this is these sorts of standalone use-cases.

Last updated: August 12, 2020 11:29

Will the PoE Port also provide Data or just power? How would the communication look like?

The PoE port is both data and power. The PHY rate of the Ethernet port is gigabit, but we have not yet tested actual throughput.

The communication will be very similar (if not identical) to the end user, as the lower-level access (USB or Ethernet) will be handled by our (open source) API. So if you choose to just use the API, you will see little to no difference.

Last updated: August 12, 2020 19:43

Will the PoE Version still have the USB Port for Fallback / Backup?

Yes. The PoE variant will be usable with USB communication or Ethernet communication (but not at the same time… it will likely be an either/or thing).

Last updated: August 12, 2020 19:51

Is the PoE-Port fast enough for all features or will there be any drawbacks?

Yes, it can do all the main value-add features. The thing it can’t do is stream all the uncompressed streams at once. So USB3 is capable of streaming 12MP RAW/uncompressed with OAK at ~41FPS (see here: https://docs.luxonis.com/faq/#maxfps).

So over Ethernet the absolute maximum throughput would be 12MP RAW/uncompressed at ~4 FPS. Keep in mind that in most cases you don’t need nearly this much data. And OAK can encode 4K/30FPS, which is only 25 mbps (well under the 1,000mbps PHY limit of Ethernet).
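The encoded-video comparison is easy to check with a line of arithmetic, sketched here. It uses only the 25 mbps and 1,000mbps figures from this answer and ignores Ethernet and protocol overhead, so treat the result as an upper-level estimate rather than measured throughput. The names are illustrative.

```python
def link_utilization(stream_mbps, link_mbps):
    """Fraction of a link's raw rate taken up by one stream."""
    return stream_mbps / link_mbps


ENCODED_4K30_MBPS = 25.0    # encoded 4K/30FPS, per this FAQ
GIGABIT_PHY_MBPS = 1000.0   # Ethernet PHY limit, per this FAQ

utilization = link_utilization(ENCODED_4K30_MBPS, GIGABIT_PHY_MBPS)
```

That works out to 2.5% of the PHY rate, leaving plenty of room for metadata and other streams alongside the encoded video.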

So in all sorts of cases, Ethernet will be fast enough. But if for whatever reason you need to record as much RAW data as possible, Ethernet will be limiting.

Last updated: August 12, 2020 19:51

Does OAK-D-POE come with WiFi or BT?

No.

OAK-D-POE does not have WiFi.

OAK-D-WIFI does not have POE.

We do not have plans to make an OAK-D-POE-WIFI, but since the designs are open-source, someone could take OAK-D-WIFI and OAK-D-POE and make an OAK-D-WIFI-POE design.

Here are the respective projects so far:

- OAK-D-POE: https://github.com/luxonis/depthai-hardware/tree/master/BW2098POE_PoE_Board

- OAK-D-WIFI: https://github.com/luxonis/depthai-hardware/issues/10#issuecomment-673237881

Note that we haven’t open-sourced the WiFi design yet because we have not yet gotten back the full WiFi design, and we always validate the designs, check for errors, fix, and re-verify before releasing.

As of this writing, the bare OAK-D-WIFI initial prototype boards are back, but not fully tested.

And in terms of OAK-D vs. OAK-D-WIFI, the trade is time:

- OAK-D ships in December.

- OAK-D-WIFI ships in March.

And in terms of cost, the WiFi/BT solution being used is the ESP32, which is a fantastically-inexpensive part. :-)

Last updated: September 04, 2020 13:27

Does OAK-D-WIFI come with POE?

No.

OAK-D-WIFI does not have POE.

OAK-D-POE does not have WiFi.

We do not have plans to make an OAK-D-POE-WIFI, but since the designs are open-source, someone could take OAK-D-WIFI and OAK-D-POE and make an OAK-D-WIFI-POE design.

Here are the respective projects so far:

- OAK-D-POE: https://github.com/luxonis/depthai-hardware/tree/master/BW2098POE_PoE_Board

- OAK-D-WIFI: https://github.com/luxonis/depthai-hardware/issues/10#issuecomment-673237881

Note that we haven’t open-sourced the WiFi design yet because we have not yet gotten back the full WiFi design, and we always validate the designs, check for errors, fix, and re-verify before releasing.

As of this writing, the bare OAK-D-WIFI initial prototype boards are back, but not fully tested.

And in terms of OAK-D vs. OAK-D-WIFI, the trade is time:

- OAK-D ships in December.

- OAK-D-WIFI ships in March.

And in terms of cost, the WiFi/BT solution being used is the ESP32, which is a fantastically-inexpensive part. :-)

Last updated: September 04, 2020 13:28

Does OAK-D come with POE?

No.

You can however upgrade to OAK-D-POE for an additional $50 to have POE support.

- OAK-D ships in December.

- OAK-D-POE ships in March.

Last updated: September 04, 2020 13:38

Does OAK-D come with WiFi?

No.

You can upgrade to OAK-D-WIFI to have WiFi capability.

- OAK-D ships in December.

- OAK-D-WIFI ships in March.

Last updated: September 04, 2020 13:38

Does OAK-D come with Bluetooth (BT)?

No.

You can, however, upgrade to OAK-D-WIFI to have Bluetooth (BT) support.

- OAK-D ships in December.

- OAK-D-WIFI ships in March.

Last updated: September 04, 2020 13:39

Does OAK-1 come with POE?

No.

But you can upgrade to OAK-1-POE for an additional $50 for POE support.

- OAK-1 ships in December.

- OAK-1-POE ships in March.

Last updated: September 04, 2020 13:46

Does OAK-1 come with WiFi?

No.

Last updated: September 04, 2020 13:45

Does OAK-1 come with Bluetooth (BT)?

No.

Last updated: September 04, 2020 13:42

Does OAK-1-POE come with WiFi?

No.

Last updated: September 04, 2020 13:51

Does OAK-1-POE come with Bluetooth (BT)?

No.

Last updated: September 04, 2020 13:51

Does OAK-1-POE come with POE?

Yes. It has POE support soldered directly onboard.

Last updated: September 04, 2020 14:50

What is BackerKit?

BackerKit is a service that crowdfunded project creators use to keep track of hundreds to tens of thousands of backers—from shipping details, pledge levels, preferences and quantities, whether they have paid or had their card declined, special notes, and everything in between!

The BackerKit software and support team is independent from the campaign’s project team—BackerKit does not handle the actual reward shipping. For more information about the preparation or delivery status of your rewards, please check the project's updates page.

How does BackerKit work?

After the campaign ends, the project creator will send you an email with a unique link to your survey. You can check out a walkthrough of the process here.

I never received my invitation. How do I complete the survey?

The most common reasons for not receiving a survey email are that you may be checking an inbox different from the email address tied to your Kickstarter, Indiegogo or Tilt Pro account, or that the email may be caught in your spam filter.

Confirm that the email address you are searching matches the email address tied to your Kickstarter, Indiegogo, or Tilt Pro account. If that doesn’t work, then try checking your spam, junk or promotions folders. You can also search for "backerkit" in your inbox.

To resend the survey to yourself, visit the project page and input the email address associated with your Kickstarter, Indiegogo or Tilt Pro account.

How do I update my shipping address?

BackerKit allows you to update your shipping address until the shipping addresses are locked by the project creator. To update your address, go back to your BackerKit survey by inputting your email here.

When will my order be shipped, charged or locked?

That is handled directly by the project creator. BackerKit functions independently of the project itself, so we do not have control of their physical shipping timeline. If you want to check on the project’s status, we recommend reading over the project's updates page.

I completed the survey, but haven't received my rewards yet. When will they arrive?

As BackerKit does not actually handle any rewards or shipping, the best way to stay updated on the shipping timeline would be to check out the project's updates page.